Modern data warehouses, data lakes, lakehouse - generically referred to as a data platform here, which includes products such as Snowflake, Databricks, AWS Redshift, and Google BigQuery are helping organizations to process petabytes of data with unprecedented speed and flexibility. The use cases solved cover a wide range, including legacy data platform modernization, analytics, business intelligence, ML & AI workloads, personalization, vertical applications.

Optimizing these data platforms for cost, performance, and reliability remains a formidable challenge. Below, we compare the complexities of managing these platforms, emphasizing cost unpredictability, query tuning, data governance, talent shortages, and the relentless pace of platform evolution.

1. Cost Management: The “Iceberg” of Cloud Data Platforms

Snowflake’s Credit Conundrum

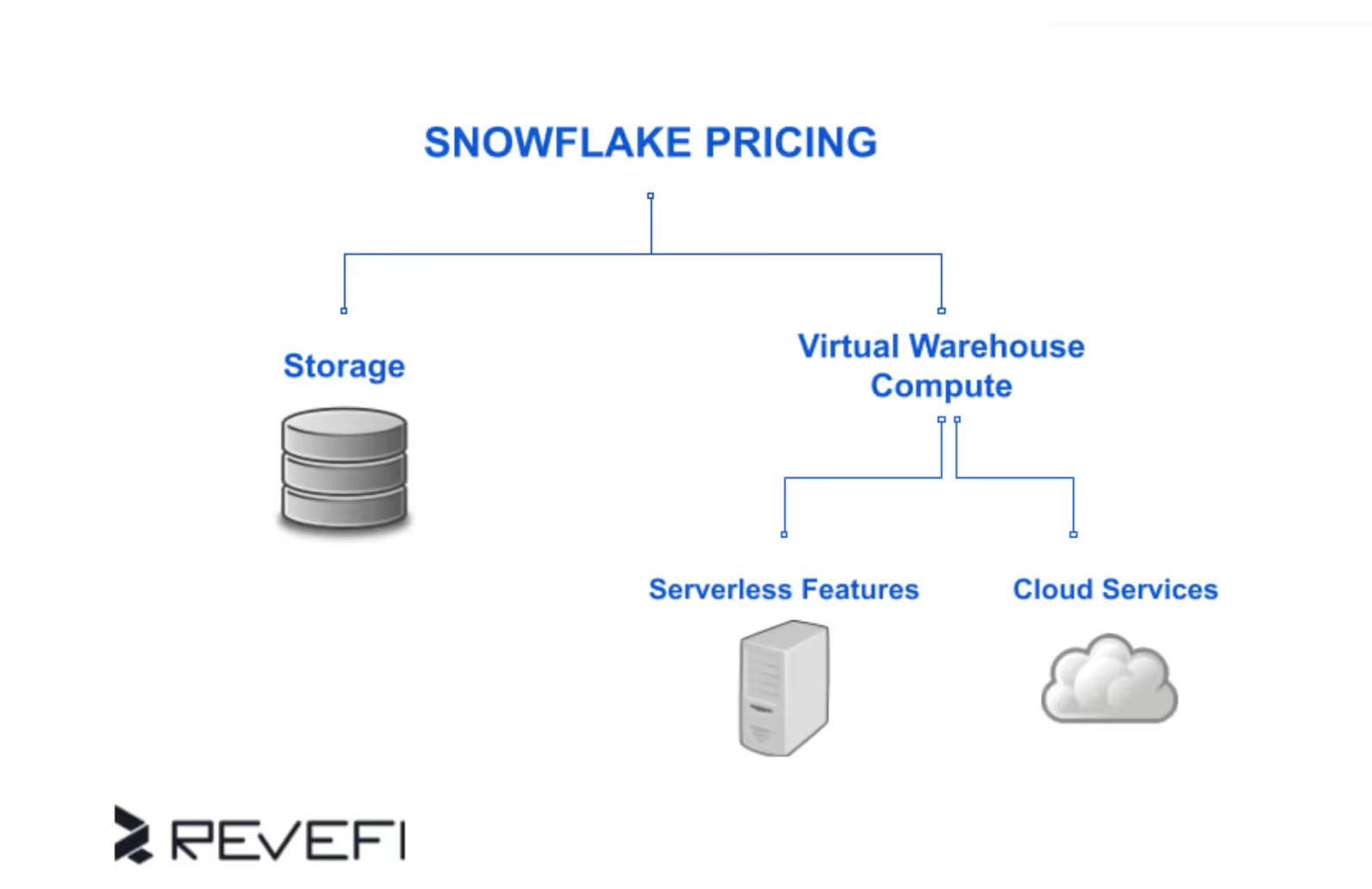

Snowflake’s consumption-based pricing model charges users for compute resources (virtual warehouses) and storage.

While its separation of storage and compute offers flexibility, improper data warehouse sizing leads to runaway costs. For example:

- Data Warehouse Oversizing: Running a 4X-Large warehouse (128 credits/hour) for simple queries that an X-Small (1 credit/hour) could handle wastes 99% of compute resources.

- Auto-Suspend Pitfalls: Setting auto-suspend too aggressively (e.g., 60 seconds) forces frequent warehouse restarts, incurring cold-start penalties. Queries against uncached data may take 3–5x longer, negating savings.

- Multi-Cluster Tradeoffs: While multi-cluster data warehouses prevent query queuing, each additional cluster duplicates credit consumption. A 4-cluster setup quadruples costs during peak loads.

A common mitigation is to adjust to identify oversized warehouses and optimize them. For example,

- Use Snowflake’s

WAREHOUSE_LOAD_HISTORYto identify oversized warehouses.

Implement workload-specific warehouses (e.g., BI vs. ETL) with tiered auto-suspend thresholds.

Databricks’ DBU Dilemma

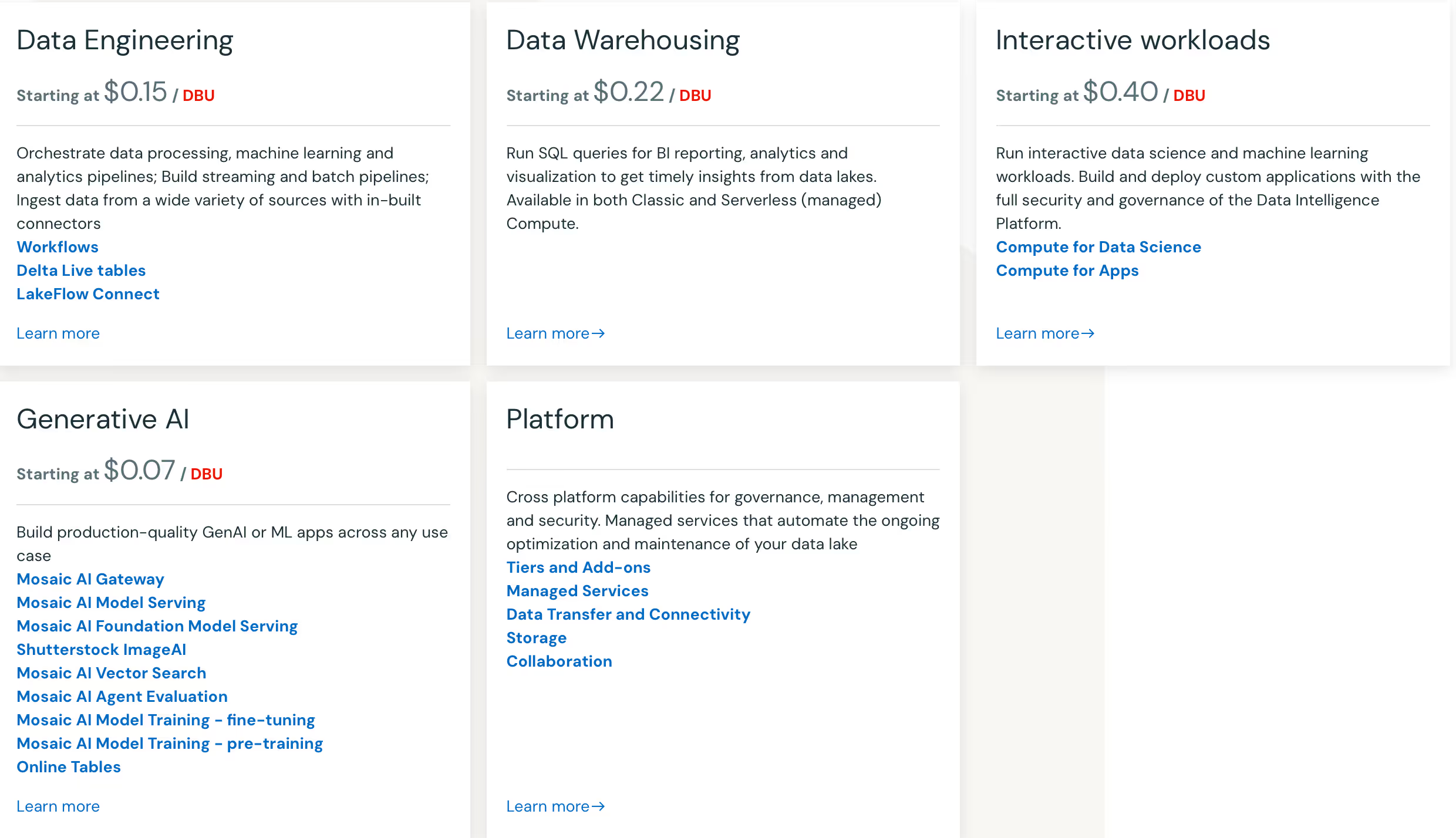

Databricks’ pricing is based on Databricks Units (DBUs), which vary by workload type (SQL, ETL, ML).

Common missteps include:

- Cluster Overprovisioning: Selecting memory-optimized instances (e.g.,

r5.4xlarge) for CPU-bound Spark jobs wastes 40–60% of memory resources. - Photon Engine Limitations: While Photon accelerates vectorized queries, it doesn’t support UDFs written in Python, forcing fallback to legacy executors.

Mitigation involves the following.

- Profile Spark jobs using Databricks’

Spark UIto right-size executors and partitions. - Enable Adaptive Query Execution (AQE) to dynamically coalesce small partitions (saving 15–30% runtime).

AWS Redshift’s Node Optimization Challenge

Redshift’s legacy DS2 nodes couple storage and compute, forcing users to overprovision for storage needs. Migrating to RA3 nodes (which decouple storage) may reduce costs but introduces new complexities:

- Concurrency Scaling Costs: Each auto-scaled cluster adds $0.25/hour, potentially inflating monthly bills by 20% during volatile workloads.

- Compression Overheads: Poor column encoding (e.g., using

RAWinstead ofBYTEDICT) will increase storage costs by 3–5x.

Potential mitigation involves the following.

- Use Redshift’s

ANALYZE COMPRESSIONto apply optimal encodings. - Limit concurrency scaling to 2 hours/day via

aws redshift modify-usage-limit.

BigQuery’s Partitioning Paradox

BigQuery’s on-demand pricing ($6.25/TB scanned) penalizes unoptimized queries:

- Unpartitioned Tables: Scanning 100 TB of historical data for a daily report costs $625/query. Partitioning by

datereduces scans to 1 TB ($6.25/query). - Nested Data Inflation: Repeated fields in nested JSON bloat storage by 70–120%, increasing scan costs.

Potential mitigation involves the following.

- Materialize nested fields into separate tables using

CREATE TABLE ... AS SELECT - Leverage BI Engine to cache frequent aggregates (reducing scan volume by 90%).

2. Performance Tuning: Battling Latency at Scale

Snowflake’s Spillage and Caching Tradeoffs

When queries exceed memory limits, Snowflake spills intermediate results to SSDs, slowing execution by 5–10x. Key indicators:

- Local Spillage: Occurs when per-node memory is exhausted. Upgrading from X-Small to Small warehouses reduces spillage by 80%.

- Remote Spillage: Data exceeds cluster cache, forcing retrievals from S3. Enabling the Query Acceleration Service cuts spillage via serverless preprocessing.

Potential mitigation involves the following.

- Monitor spillage via

QUERY_HISTORYand scale data warehouses proportionally. - Pre-warm caches by running dummy queries before critical jobs.

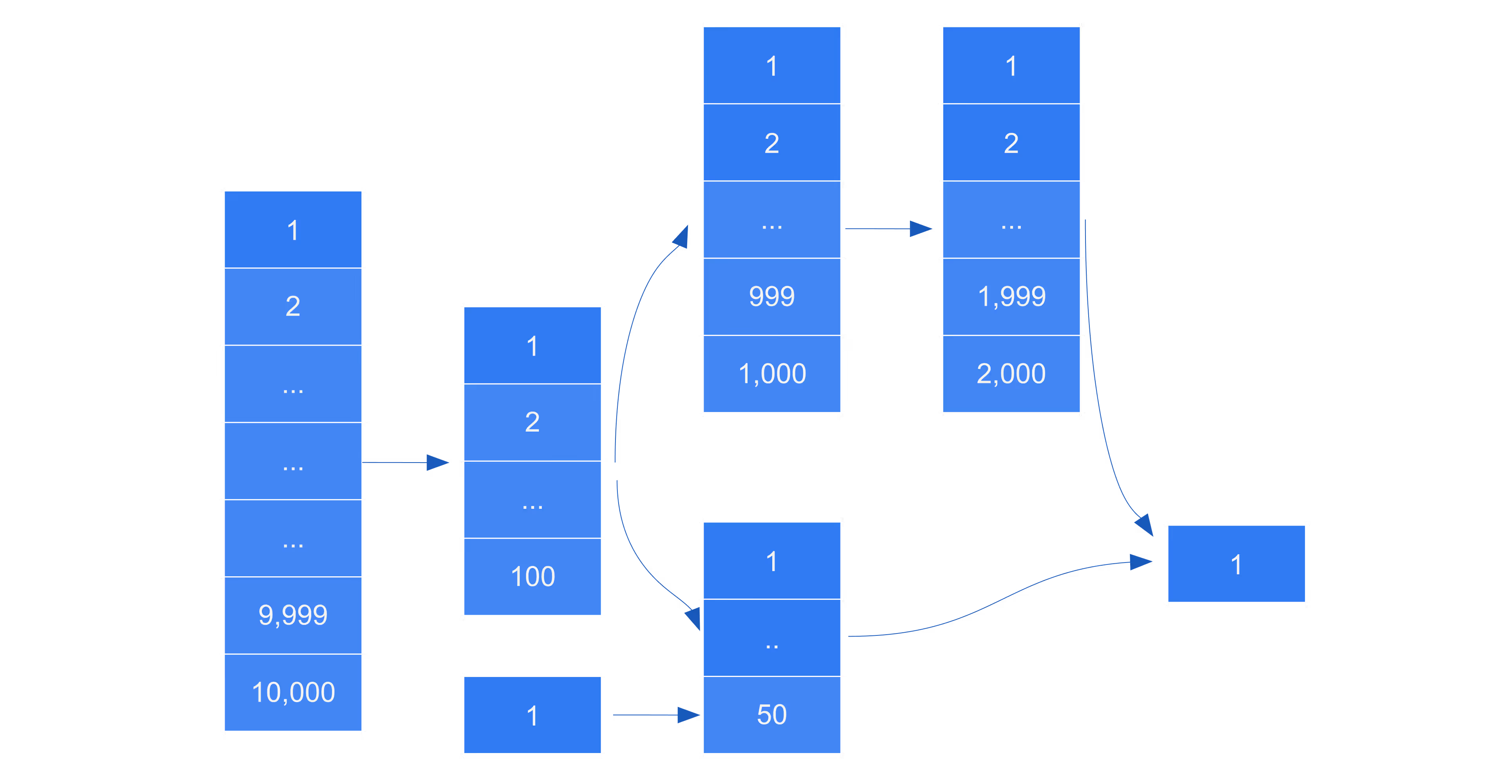

Databricks’ Skew and Shuffle Limitations

Data skew in Spark JOINs causes straggler tasks, while excessive shuffling (e.g., GROUP BY on unpartitioned columns) slows jobs by 40%:

- Skew Remediation: Use

REPARTITIONto evenly distribute hot keys. - Shuffle Optimization: Cache shuffled data with

spark.shuffle.service.enabled true.

Potential mitigation involves using Photon Engine gains which:

- Accelerates vectorized scans by 2–4x but requires Parquet-formatted Delta tables.

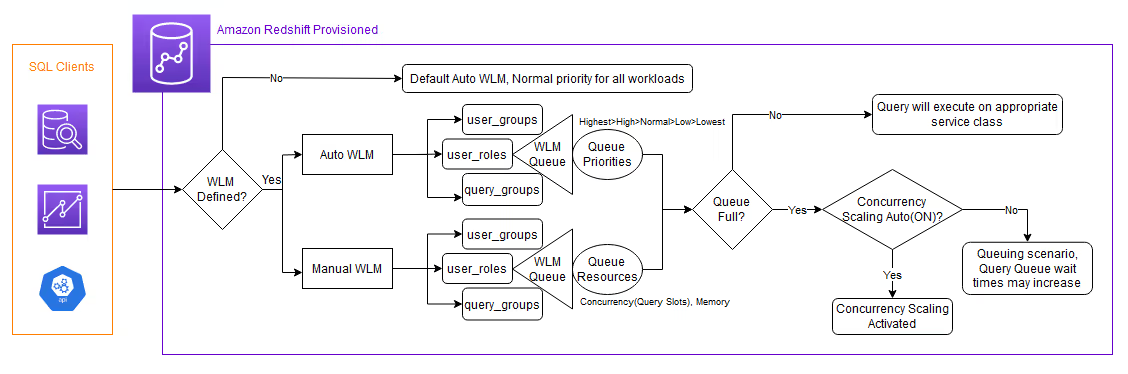

Redshift’s WLM and Zone Map Pitfalls

Redshift’s Workload Management (WLM) queues throttle concurrency:

- Queue Overflows: Allocating only 5 slots to ETL jobs causes 30% query timeouts during peak hours.

- Zone Map Gaps: Unsorted tables render zone maps ineffective, increasing I/O by 70%.

Potential mitigation involves the following.

- Sort fact tables by

timestampandtenant_idto optimize zone maps. - Enabling Short Query Acceleration for sub-second queries.

BigQuery’s Slot Contention

BigQuery’s slot-based execution risks under provisioning:

- On-Demand Limits: Default 2,000 slots struggle with 100+ concurrent users, causing 15–30s delays.

- Flat-Rate Inefficiency: Paying for 2,000 slots ($2,000/month) but using only 20% wastes $1,600/month.

You can mitigate this by:

- Use of

RESERVATIONSto allocate slots to critical workloads. - Optimize JOIN order to reduce slot usage (e.g., filter before JOINING).

3. Data Quality and Governance: The Silent Crisis

Schema Drift Across Platforms

- Snowflake: Schema-on-read allows JSON ingestion but risks type mismatches (e.g.,

stringvs.timestamp). - Databricks: Delta Lake’s schema enforcement blocks invalid DMLs but requires manual MERGE updates.

- BigQuery: Automatic schema detection misinfers nested JSON types in 12% of cases.

Potential mitigation involves the following.

- Enforce data contracts with

dbttests. - Use Databricks’

DeltaLiveTablesfor schema validations.

Metadata Fragmentation

- Redshift:

STL_QUERYlogs lack lineage context, forcing manual tracebacks.

Snowflake:ACCESS_HISTORYtracks column access but not transformation logic.

You can reduce fragmentation by the following.

- Integrating OpenLineage with Databricks and AWS Glue for cross-platform lineage.

4. Talent and Operational Challenges

Skill Gaps

- Platform Expertise: Optimizing Snowflake’s Search Optimization Service requires understanding micro-partition pruning, a niche skill.

- Language Divide: Spark experts often lack SQL tuning skills for Redshift’s WLM.

Update Fatigue

- Snowflake: Quarterly updates (e.g., Hybrid Tables) deprecate legacy features like temporary tables.

- Databricks: Photon Engine updates break Python UDFs, requiring quarterly refactoring.

Potential mitigation involves the following.

- Partner with MSPs for upgrade testing.

- Automate regression testing with dbt and other tools.

- Augment with modern AI solutions that are optimized for data management

- Upskill your team with specific AI powered solution, such as Revefi

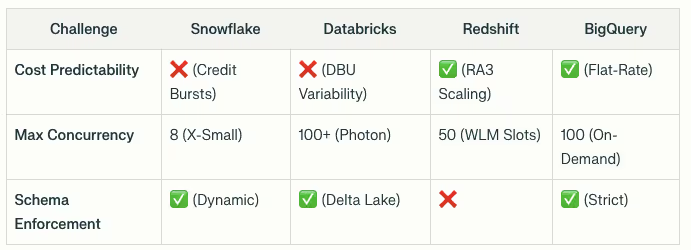

5. Comparative Analysis

Conclusion

Optimizing modern data warehouses demands continuous learning, adapting and more which continues to be time consuming and often error prone.

- Cost Controls: Rightsize resources, leverage spot instances (Databricks), and automate suspension.

- Performance: Profile spills/skew, optimize partitions, and use platform-specific accelerators (Photon, QAS).

- Governance: Enforce schemas via Delta Lake/BigQuery contracts and automate lineage.

- Talent: Cross-train teams on SQL/Spark/other and adopt CI/CD for upgrades.

As platforms evolve, data teams continue to struggle to keep up. There is a clear need to augment data teams to keep up with the advances, accuracy and timely responses. GenAI based solutions such as Revefi serve as a good starting point.